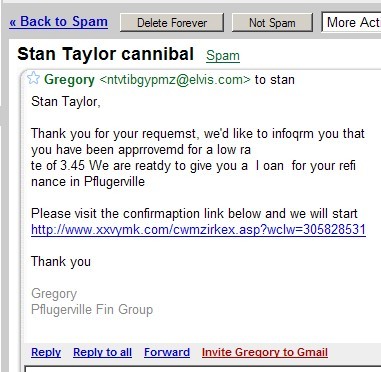

Stan Taylor cannibal

While scanning my spam folder this morning, before deleting the 64 messages that came in overnight, I noticed this one:

By the way, Gmail’s spam filter is great. Of the 150-200 spam messages that I receive each day, only about half a dozen slip past it into my inbox, and false positives (good email incorrectly identified as spam) are rare, maybe one message every few weeks.